About Me

- Angelo

- I'm a colonist who has declared war on machines and intends to conquer them some day. You'll often find me deep in the trenches fighting off bugs and ugly defects in code. When I'm not tappity-tapping at my WMD (also known as a keyboard), you'll find me chatting with friends, reading comics or playing a PC game.

Sunday, June 15, 2008

Firefox Download Day

Hopefully when Firefox 3 sets a world record for the most software downloaded in a 24-hour period, more web-users will sit up and take notice. We are a country with the second largest population in the world. Let's prove that we are also willing to embrace a future of free and open-source software by participating in this important event.

On a side-note, I wouldn't recommend any NIN fan opening http://remix.nin.com with IE6.

Tuesday, June 10, 2008

Sorting Stuff with Heap Sort - Part II

Heap sort begins by building a heap of the data. A heap can be visualized as a binary tree where each parent node is greater than its successors. In practice, the heap can easily be represented by a simple array where the left and right children of the ith node in the array will be at positions 2*i+1 and 2*i+2 respectively. For example, the following is a heap:

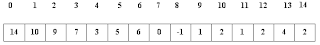

The heap above can be represented using an array as follows:

Note that the ith element has the (2i+1)th and (2i+2)th elements as its children. The fact that the heap can be represented like this means that we can do an in-place sort without having to construct an entirely new data structure for storage. There are a couple of interesting features of the heap that are worth noting:

1. Each node is greater than or equal to its successors (for sorting in ascending order).

2. By virtue of (1), the largest node will always be on top of the heap.
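To make the array layout concrete, here is a minimal Python sketch. The helper names `children` and `is_max_heap` and the sample values are my own picks for illustration; they are not the heap from the picture above.

```python
def children(i):
    """Return the array positions of the left and right children of node i."""
    return 2 * i + 1, 2 * i + 2


def is_max_heap(data):
    """Check feature (1): no child is larger than its parent."""
    n = len(data)
    for i in range(n):
        for child in children(i):
            # A child position past the end of the list simply does not exist.
            if child < n and data[child] > data[i]:
                return False
    return True


# Hypothetical sample values, chosen only for this example.
example = [9, 7, 8, 3, 5, 6, 4]

print(children(1))                  # (3, 4)
print(is_max_heap(example))         # True
print(example[0] == max(example))   # True, which is feature (2)
```

No extra tree structure is needed here: the parent-child links are just arithmetic on list positions.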

Now that we know what the heap is, let’s see how we can generate such a heap out of unsorted data.

Building the Heap.

Before we talk about building a heap, we must first discuss a sifting procedure. The sifting procedure sifts a given node downward through the tree until it reaches its rightful place. I may keep using the term ‘node’ simply because it's easier to visualize things that way, but remember that in practice a node is nothing but an element in a list. The sifting algorithm is pretty straightforward.

Procedure SIFT(int siftNode, int heapSize)

1. Start with the position of the node to be sifted.

2. If either of the sift node’s children are larger than it, then swap the sift node with the larger of its children.

3. If neither of the sift node’s children are larger than it or the end of the heap has been reached then exit.

4. Otherwise, go to step 2.
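The steps above can be sketched in Python roughly as follows. This is only one way to write it: I am assuming the list is passed in as an extra `data` argument (the procedure above takes just the node position and the heap size), and the sample values are made up.

```python
def sift(data, sift_node, heap_size):
    """Sift data[sift_node] down until neither child is larger than it."""
    while True:
        left = 2 * sift_node + 1
        right = 2 * sift_node + 2
        largest = sift_node

        # Find the larger child, ignoring positions past the end of the heap.
        if left < heap_size and data[left] > data[largest]:
            largest = left
        if right < heap_size and data[right] > data[largest]:
            largest = right

        # Step 3: neither child is larger (or there are no children), so exit.
        if largest == sift_node:
            return

        # Step 2: swap with the larger child, then carry on from there.
        data[sift_node], data[largest] = data[largest], data[sift_node]
        sift_node = largest


values = [1, 9, 8, 3, 5]   # hypothetical input: only the top node is out of place
sift(values, 0, len(values))
print(values)              # [9, 5, 8, 3, 1]
```

The `heap_size` argument lets the caller treat only the first part of the list as the heap, which is why it is passed separately instead of using the length of the list.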

Using the sift procedure, we can easily build an initial heap out of unsorted data. We start with the last node in the tree or, in other words, the last element in the input data list, and invoke our earlier sift procedure on it. Then we move on to the previous element in the list, and the cycle continues until we have sifted all of the elements. Working backwards like this means that by the time a node is sifted, everything below it already forms a heap, so the node only has to sink into its place. The result will be a nice heap. The following algorithm should make the idea clearer. Note that it assumes a zero-based input data list.

Procedure Heapify()

1. siftNode = inputDataList.length()-1

2. while siftNode >= 0

{

3. Invoke SIFT( siftNode, inputDataList.length() )

4. siftNode = siftNode - 1

}
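Here is a runnable Python sketch of the Heapify procedure above. The `sift` helper is my own rendering of the SIFT steps (with the list passed in as an assumed extra `data` argument), and the input values are made up for the example.

```python
def sift(data, sift_node, heap_size):
    """Sift data[sift_node] down until neither child is larger than it."""
    while True:
        left, right = 2 * sift_node + 1, 2 * sift_node + 2
        largest = sift_node
        if left < heap_size and data[left] > data[largest]:
            largest = left
        if right < heap_size and data[right] > data[largest]:
            largest = right
        if largest == sift_node:
            return
        data[sift_node], data[largest] = data[largest], data[sift_node]
        sift_node = largest


def heapify(data):
    """Turn a zero-based list into a heap in place, last element first."""
    sift_node = len(data) - 1
    while sift_node >= 0:
        sift(data, sift_node, len(data))
        sift_node = sift_node - 1


numbers = [3, 1, 4, 1, 5, 9, 2, 6]   # hypothetical unsorted input
heapify(numbers)
print(numbers[0])                    # 9, the largest value ends up on top
```

Sifting a node that has no children does nothing, so a variation could start the loop at the last node that actually has a child. I have kept the loop exactly as in the procedure above.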

Building an initial heap out of the input data is sometimes known as “Heapifying” the data. We are not done yet. The heap now has to be sorted, and we'll see how that can be done soon. Until then, have a nice week!

Thursday, June 5, 2008

Sorting Stuff with Heap Sort - Part I

I’m starting a small series of blog posts that will explain the Heap Sort algorithm in depth. I’ve noticed that this is an academic topic that few are willing to touch but I am! I hope you find this short series informative.

The Basics.

First, let's get through the basics of any sorting algorithm. A sorting algorithm is an algorithm that seeks to arrange the elements of a data structure (think of a simple List) in a particular order. In most cases, we prefer the elements to be sorted in either ascending or descending order.

The efficiency of an algorithm can be measured in terms of its Time Complexity or Space Complexity. The term Time Complexity refers to the amount of time that an algorithm takes to process the input and produce the desired output. The term Space Complexity refers to the amount of space or memory that an algorithm takes to produce the expected result. Generally, the complexity of an algorithm (time or space) is expressed as a function of the size of the problem, n. This is known as the Big-Oh Notation. So, if we were to say that a sorting algorithm has a time complexity of O(n), it means that the algorithm will take a factor of 10 units of time to sort 10 values. If the complexity of the algorithm were O(n^2), then it would take a factor of 100 units of time to sort 10 values. Note that I have said that the algorithm will take "a factor of" time because this factor would depend on the particular problem. For example, if we were sorting simple integers, we could expect this factor to be a lot less than if we were sorting strings.

Also, the complexity of an algorithm can be specified for Best, Worst or Average case scenarios. For many sorting algorithms, the best case is when the data is already sorted in the first place. A worst case arises when the data is arranged in a manner that is contrary to the working of the algorithm. Generally, an algorithm is judged by its worst-case scenario, but in some cases we also consider the average case.

Heap Sort is an O(n log n) time complexity algorithm. This is in stark contrast with other sorting algorithms such as Bubble Sort (the unjustifiably most famous sort), which has an average and worst case complexity of O(n^2). In my next post, we will dive into the Heap Sort algorithm and find out why it's called Heap Sort in the first place.
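To get a feel for how far apart these growth rates are, here is a small Python sketch that tabulates n, n log n and n^2 for a few sizes. The function name `growth` and the choice of a base-2 logarithm are my own assumptions; Big-Oh ignores constant factors, so these numbers only show the trend and say nothing about real running times.

```python
import math


def growth(n):
    """Return (n, n log2 n rounded, n squared) for a problem of size n."""
    return n, round(n * math.log2(n)), n * n


for size in (10, 100, 1000):
    linear, n_log_n, quadratic = growth(size)
    print(f"n = {linear:>4}   n log n = {n_log_n:>5}   n^2 = {quadratic:>7}")
```

At 1000 values, the n^2 column is already about a hundred times larger than the n log n column, and the gap keeps widening as n grows.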